A few months ago, philz1337x released Clarity Upscaler, an open-source upscaler and enhancer. The tool builds on Stable Diffusion 1.5 and combines several techniques to achieve impressive results. Intrigued by its capabilities, we reproduced it using the Refiners library, aiming for a version that is easier to use and more customizable for integration into various projects. You can try it out on our HuggingFace Space.

Upscaler or Enhancer? Fidelity vs. quality trade-off

The Clarity AI Upscaler is a hybrid tool that functions as both an upscaler and an enhancer. It uses the Juggernaut finetuned model to recreate image details through a partial denoising process. This is often called “image-to-image” processing, as opposed to “text-to-image” generation, although it is essentially a variant of the same underlying process. Because the model regenerates part of the image, it can introduce details absent from the original, which is problematic for some applications. This trade-off between fidelity and quality is a crucial consideration when using the tool.

To illustrate the effects of each step, we’ll use the following image from Kara Eads on Unsplash, which we downscaled to 768x512 and slightly blurred to simulate a low-resolution image.

A key parameter in this process is what Clarity AI Upscaler calls “creativity,” which is the diffusion strength. This parameter controls the balance between recreating existing details and generating new content. Let’s explore how different diffusion strengths affect the output:

At Clarity’s default diffusion strength, the result is very similar to the original image, although some details have changed:

At 0.6 diffusion strength, the resulting image is very different from the original and cannot be considered a faithful representation of the original image:

The choice of diffusion strength represents a trade-off between fidelity (faithfulness to the original image) and quality (introduction of new details). Finding the right balance is crucial for achieving optimal results.
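To make the role of diffusion strength more concrete, here is a minimal sketch of how a strength value is commonly turned into a partial denoising schedule in image-to-image pipelines: the input is noised partway, and only the last portion of the steps is run. The function name `partial_denoise_schedule`, the rounding rule, and the 20-step example are illustrative assumptions, not the Clarity or Refiners implementation.

```python
def partial_denoise_schedule(num_steps: int, strength: float) -> tuple[int, int]:
    """Return (first_step, steps_to_run) for an image-to-image pass.

    Assumption: strength 0.0 leaves the input untouched (no steps run) and
    strength 1.0 runs the full schedule, as in text-to-image generation.
    """
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    # Hypothetical rounding rule: run a share of the steps proportional to strength.
    steps_to_run = round(num_steps * strength)
    first_step = num_steps - steps_to_run
    return first_step, steps_to_run


if __name__ == "__main__":
    for strength in (0.0, 0.5, 0.6, 1.0):
        first, run = partial_denoise_schedule(20, strength)
        print(f"strength={strength}: skip {first} steps, run {run}")
```

The higher the strength, the more denoising steps are free to rewrite the image, which is why fidelity drops as strength increases.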

Targeting Large Images

Naive Upscaling

The most straightforward approach to producing a large image is to upsample the low-resolution version before applying the enhancement process. While this initially reduces image quality, the enhancer then works to recreate details in the larger image.

But we’re quickly confronted with the model’s limits. It is not designed to handle large images and struggles to recreate details in a 2k image: the Juggernaut finetune of Stable Diffusion 1.5 was trained on images with a maximum resolution of around 1k. In addition, the required memory scales quadratically with the image’s side length, so processing a 2k image requires around 4 times more memory than a 1k image.
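The 4x figure can be checked with a little arithmetic. The sketch below counts the latent positions the model has to process, assuming square 1024 and 2048 pixel images and the 8x spatial downsampling of the Stable Diffusion 1.5 autoencoder. Treating memory as proportional to this count is a simplification: some layers, such as self-attention without memory-efficient kernels, can grow faster. The helper name `latent_positions` is hypothetical.

```python
def latent_positions(width: int, height: int, downscale: int = 8) -> int:
    """Number of spatial positions in the latent for a width x height image.

    Assumption: each side is divided by `downscale` (8 for the SD 1.5 autoencoder).
    """
    return (width // downscale) * (height // downscale)


positions_1k = latent_positions(1024, 1024)  # 128 * 128
positions_2k = latent_positions(2048, 2048)  # 256 * 256
ratio = positions_2k / positions_1k

if __name__ == "__main__":
    print(f"1k: {positions_1k} positions, 2k: {positions_2k} positions, ratio: {ratio}")
```

Doubling the side length quadruples the number of positions, which is the quadratic growth described above.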

MultiDiffusion For Larger Images

We implement the tiled diffusion process introduced in the MultiDiffusion paper to overcome the limitations of processing large images. This innovative technique involves:

- Splitting the image into manageable tiles.

- Enhancing each tile separately.

- Merging the enhanced tiles back together by blending the overlapping regions at each denoising step.

This approach allows the model to focus on the details within each tile without losing sight of the global structure. Clarity uses 896x1152 pixel tiles, but you can tune and experiment with this parameter. Smaller tiles tend to enhance details more aggressively, sometimes at the expense of overall image coherence, and can hallucinate details that weren’t present in the original image. The tile size parameter is called “fractality” in the Clarity AI Upscaler, reflecting that smaller tiles make the diffusion generate small, intricate details.
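The split-and-merge steps can be sketched in plain Python. This is a simplified illustration, not the Refiners or Clarity code: the helper names `tile_starts` and `blend_tiles` are hypothetical, overlapping regions are merged with a uniform average (real implementations may use weighted masks), and in an actual pipeline the blend would run on latents at every denoising step.

```python
def tile_starts(length: int, tile: int, overlap: int) -> list[int]:
    """Start offsets of tiles of size `tile` covering [0, length),
    with neighbouring tiles sharing at least `overlap` positions."""
    if tile >= length:
        return [0]
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last tile sits flush with the border
    return starts


def blend_tiles(height: int, width: int, tiles: list) -> list[list[float]]:
    """Merge tiles given as (top, left, values), averaging where they overlap.

    Every position must be covered by at least one tile.
    """
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for top, left, values in tiles:
        for dy, row in enumerate(values):
            for dx, value in enumerate(row):
                total[top + dy][left + dx] += value
                count[top + dy][left + dx] += 1
    return [[total[y][x] / count[y][x] for x in range(width)] for y in range(height)]


if __name__ == "__main__":
    # Toy 1x6 "image" split into two 1x4 tiles that overlap on 2 columns.
    starts = tile_starts(6, tile=4, overlap=2)
    fake_outputs = [1.0, 3.0]  # stand-ins for each tile's enhanced content
    tiles = [(0, left, [[value] * 4]) for left, value in zip(starts, fake_outputs)]
    print(starts, blend_tiles(1, 6, tiles))
```

In the toy example, the two middle columns receive contributions from both tiles and end up with their average, which is what smooths the seams between neighbouring tiles.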

Advanced Upscaling: Enhancing the Starting Point

To improve results further, we can replace the simple initial upscaling algorithm with a specialized model. The Clarity pipeline employs the 4x-UltraSharp ESRGAN model, which is trained to upscale images by a factor of 4 and produces exceptionally sharp results. However, we opt for the original open-source ESRGAN in our reproduction due to licensing restrictions. While the results may be slightly less sharp, they are still a significant improvement over naive upscaling methods.

For those interested in comparing various upscaling models, we recommend Philip Hofmann’s excellent Interactive Visual Comparison of Upscaling Models.

Generating New Details: The Tile ControlNet

To further improve our results, we incorporate the ControlNet Tile developed by the renowned Lvmin. This powerful tool serves two crucial functions:

- It generates new details while ignoring existing ones in the image.

- It enforces the global structure of the original image.

The strength of this ControlNet is aptly named “resemblance,” as it determines how closely the output adheres to the original image’s structure. ControlNet uses a reference image to guide the diffusion process; in our case, we use the pre-upscaled version of the target image as the guide. By applying the ControlNet Tile to each MultiDiffusion tile, we achieve a balance between detail enhancement and structural integrity.

Where the MultiDiffusion output seems to be too smooth and lacking in texture, the ControlNet Tile adds a sharper, more detailed appearance to the image:

Final Tweaks for Optimal Results

To push the quality of our outputs even further, we apply these additional techniques taken from Clarity AI:

ControlNet Scale Decay

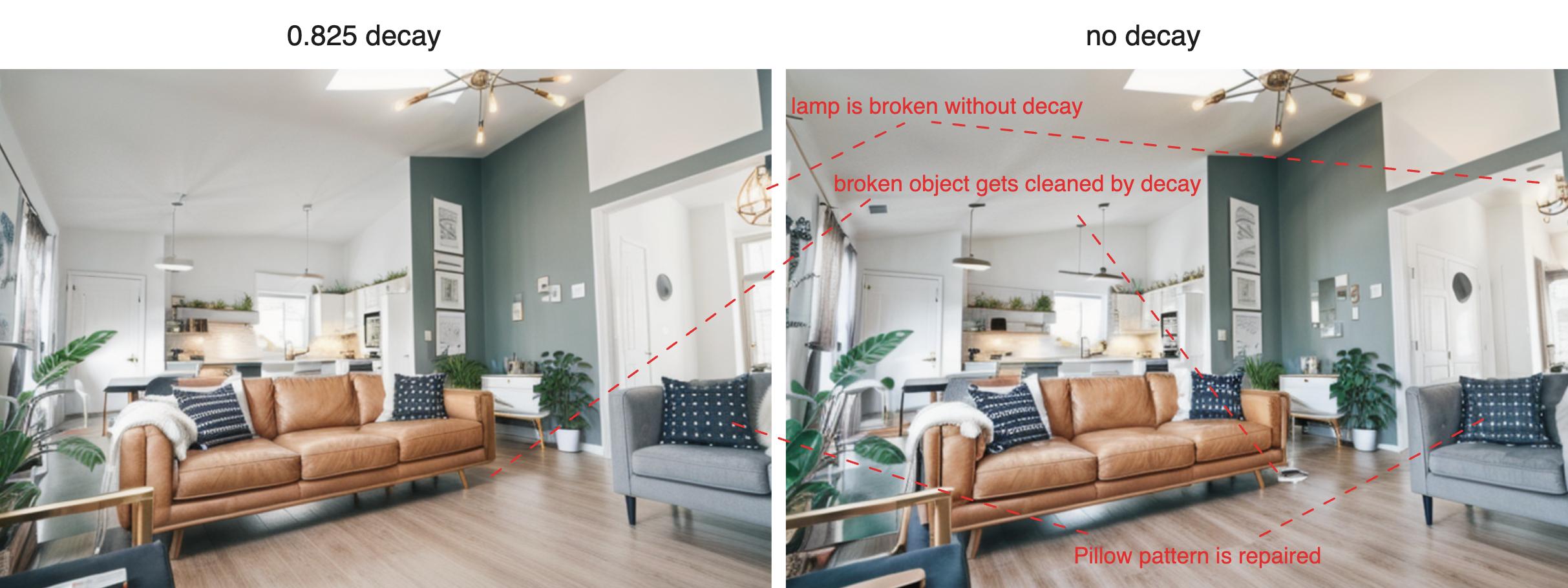

We implement a feature similar to SD-Webui’s “control mode” option set to “Prompt is more important” that applies an exponential decay factor of 0.825 to reduce the ControlNet’s influence, starting from the last block and moving toward the first. This subtle adjustment allows the model to recreate details creatively while maintaining structural guidance.

The difference is subtle but noticeable. The ControlNet Tile without decay often overdoes it and hallucinates unnecessary details, whereas the decayed version helps repair broken details and adds a more natural look to the image.
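The decay can be written down in a few lines. The sketch below computes one scale per ControlNet residual so that the last block keeps the full scale and each earlier block is multiplied by a further factor of 0.825. The helper name `decayed_controlnet_scales`, the default of 13 blocks (an assumption for a Stable Diffusion 1.5 ControlNet), and the base scale of 1.0 are illustrative, not values taken from the Clarity or Refiners code.

```python
def decayed_controlnet_scales(
    scale: float, num_blocks: int = 13, decay: float = 0.825
) -> list[float]:
    """Per-block ControlNet scales, ordered from the first block to the last.

    The last block keeps `scale`; moving toward the first block, each step
    multiplies the scale by `decay` once more.
    """
    if num_blocks <= 0:
        raise ValueError("num_blocks must be positive")
    return [scale * decay ** (num_blocks - 1 - i) for i in range(num_blocks)]


if __name__ == "__main__":
    for index, value in enumerate(decayed_controlnet_scales(1.0)):
        print(f"block {index:2d}: scale {value:.3f}")
```

With these assumptions, the first block receives only a small fraction of the base scale, so the ControlNet constrains it far less than the last block.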

Leveraging LoRAs and Negative Embeddings

We incorporate two key LoRAs (Low-Rank Adaptations) selected by Clarity to enhance our base model:

- SDXLrender: This LoRA addresses Stable Diffusion 1.5’s tendency to produce blurry images compared to Stable Diffusion XL.

- Add More Details: This LoRA enhances image details, ensuring our output is as sharp as possible.

Additionally, we use the Juggernaut Negative Embedding to refine our results further. This technique optimizes the representation of a new “word” added to the negative prompt, improving output quality.

Multi-Stage Upscaling

As the Clarity pipeline does, we employ a multi-stage upscaling process for significant enlargements (e.g., 4x or 8x). Instead of attempting a single large upscale, we perform multiple smaller upscales (typically 2x each). This multi-stage process allows the model to focus on details at each stage without losing sight of the overall structure. In addition, the diffusion strength is reduced by 20% at each stage, which prevents excessive creativity at later stages that would cause texture hallucinations.
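As a rough sketch of this schedule, the function below splits a total upscale factor into stages of at most 2x and lowers the diffusion strength by 20% from one stage to the next. The name `plan_stages`, the multiplicative reading of "reduced by 20%", the handling of factors that are not powers of two, and the example starting strength of 0.5 are all assumptions made for illustration.

```python
def plan_stages(
    total_factor: float,
    strength: float,
    max_stage_factor: float = 2.0,
    strength_decay: float = 0.8,
) -> list[tuple[float, float]]:
    """Return a list of (stage_factor, stage_strength) pairs.

    Assumption: each stage upscales by at most `max_stage_factor`, and the
    strength is multiplied by `strength_decay` after every stage.
    """
    if total_factor < 1.0:
        raise ValueError("total_factor must be at least 1")
    if max_stage_factor <= 1.0:
        raise ValueError("max_stage_factor must be greater than 1")
    stages = []
    remaining = float(total_factor)
    current_strength = strength
    while remaining > 1.0:
        factor = min(max_stage_factor, remaining)
        stages.append((factor, current_strength))
        remaining /= factor
        current_strength *= strength_decay
    return stages


if __name__ == "__main__":
    for factor, stage_strength in plan_stages(8, strength=0.5):
        print(f"upscale x{factor}, diffusion strength {stage_strength:.3f}")
```

Under these assumptions an 8x enlargement becomes three 2x stages, each more conservative than the previous one.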

Takeaways

Through careful reproduction, we’ve created a standalone Python version of the Clarity AI Upscaler using the Refiners library that offers high-quality results and greater flexibility for integration in various projects. By understanding and implementing these advanced techniques, we can push the boundaries of image enhancement and upscaling, opening up new possibilities for visual content creation and restoration.

Here is a side-by-side comparison of the outputs from the Clarity AI Upscaler’s Replicate Space with default parameters and our Refiners-based reproduction:

There are still some differences between the two outputs. One possible reason is that we weren’t able to reproduce the random state of the Clarity AI Upscaler, so the results may vary slightly. We also observe that the Clarity AI Upscaler outputs look crisper and have more contrast, but look less natural than those of our Refiners-based reproduction.

Stay tuned for a detailed guide on how to use and extend this new tool, which we will publish soon in Refiners’ documentation.